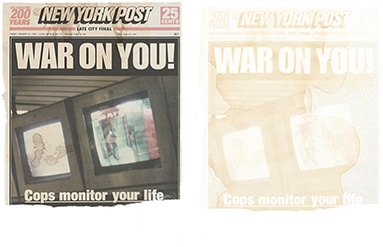

Right: Kim Piterrs, Fuck the Police, 2009. “Yellow-toned” X-ray radiography of Fuck the Police, 2005.

Images courtesy of the Saatchi Gallery, London. Photos by Zarko Vijatovic.

Big Data hopes to liberate us from the work of self-construction—and justify mass surveillance in the process.

Given what we now know about the NSA and Google and Verizon and Acxiom and all the other components of the Big Data surveillance machine, it seems natural to see in this infrastructure the long-prophesied realization of Big Brother. It makes for a recognizable fear, comforting in its familiarity. But it is also misleading. If media scholar Mark Andrejevic’s most recent book, Infoglut: How Too Much Information Is Changing the Way We Think and Know, is right, the overt and explicit appearance of Big Brother serves only to conceal the possibly more troubling fact of his ultimate indifference.

That’s not to say we are not being watched. Surveillance has become as thorough as technology permits it to be, as the legal restraints devised to limit it in earlier eras have become outmoded, irrelevant. Since many people have become alert to the surveillance threat through smartphones that track us individually, we tend to imagine the problem with surveillance is that it can single us out and expose us. Surveillance is conceived as a kind of panoptic voyeurism that selects us for unfair scrutiny and treatment, requiring us to adopt a superficial conformity as camouflage. It plays on our worry about our personal reputations.

But Andrejevic argues that in an era of mass surveillance, the surveillance apparatus doesn’t care about our individual story. Instead, Big Data is interested in broader statistical profiles of populations. Mass surveillance controls without necessarily knowing anything that compromises any individual’s privacy. To the degree that companies have access to the devices we use to mediate our relation to everyday life, they deploy algorithms based on correlations found in large data sets to shape our opportunities—our sense of what feels possible. Undesirable outcomes need not be forbidden and policed if instead they can simply be made improbable. We don’t need to be watched and brainwashed to be made docile; we just need to be situated within social dynamics whose range of outcomes has been modeled as safe for the status quo. It’s not: “I see what you are doing, Rob Horning, stop that.” It’s: “Rob Horning can be included in these different data sets, which means he should be offered these prices, these jobs, these insurance policies, these friends’ status updates, and he’ll likely be swayed by these facts.”

This form of control is indifferent to whether or not I “believe” in it. I can’t choose to opt out or face it down with a vigilant skepticism. I can decide to resist—I can toss my smartphone in a lead bag and refuse all social media—but these decisions won’t affect the social infrastructure I am embedded in. At best, such self-abnegation will register only as a marker of my quirky nonconformity. It may only supply consumerism with new symbols of rebellion to market.

As it has become feasible to scale surveillance up, the institutional logic of surveillance, Andrejevic argues, has turned itself inside out. What mass data collectors want, be they the state agencies trying to ensure stability or commercial entities hoping to mine for marketing insight, is not potentially incriminating information about you as an individual so much as mundane information at the scale of populations. The macro-level goal is to construct a working model of society in data, so the more conforming you are, the more you need to be watched, to weight the models properly:

In population-level intelligence-gathering, authorities count on the fact that the vast majority of those details collected are innocent because it is against this background of unsuspicious or normal activity that the anomalies of deviant and particularly threatening behavior are meant to emerge.

Since the data of average people establishes the backdrop statistics of normality, being a compliant citizen makes one essential to surveillance rather than exempt from it. This level of surveillance is thus no longer intended to enforce discipline, as in a panoptic model of social control. Instead it aspires to sink into the hum of technologically mediated everyday life. You get watched not because you’re a “person of interest” but because you’re probably already perfectly ordinary.

This approach to mapping the social in data works not only for crime detection but also for targeted marketing. Data patterns that indicate a life transition (death in the family, divorce, pregnancy, job loss, relocation—all of which render one especially vulnerable to advertising) can be detected within one group and exploited across other groups that have similar statistical profiles, in degrees calibrated to the level of correlation. Since this form of surveillance is not particular to individuals, whether the data is “anonymized” doesn’t mitigate the sorts of intervention it can warrant. Correlations amid anonymized data still “can be used to shape the opportunities available to and the interventions visited upon others,” Andrejevic notes. Under these conditions, trying to protect individual privacy by making collected data “anonymous” becomes an irrelevant tactic.

Surveillance has aspired to become as ordinary as the population it seeks to document. Social media, smartphones, wearable trackers like Fitbit, and other interlocking and ubiquitous networks have made surveillance and social participation synonymous. Digital devices, Andrejevic notes, “make our communication and information search and retrieval practices ever more efficient and frequent” at the cost of generating a captured record of them. Surveillance is thereby linked not with suspicion but with solicitude. Each new covetable digital service, he points out, is also at once a new species of data collection, creating a new set of background norms against which to assess people.

Thanks to the services’ ongoing proliferation, it has become increasingly inconvenient to take part in any social activity—from making a purchase to conversing with friends online to simply walking down the street—that doesn’t leave permanent data traces on a privately owned corporate server. The conveniences and connectivity promised by interactive technologies normalize what Andrejevic calls “digital enclosure”—turning the common space of sociality into an administered space in which we are all enlisted in the “work of being watched,” churning out information for the entities that own the databases.

The transformation of social behavior into a valuable metacommodity of marketing data has oriented communication technology toward its perpetual collection. We become unwitting employees for tech companies, producing the data goods for those companies’ true clients: the companies that can process the information.

Because surveillance is shaped and excused as interactive participation, we experience it not as a curtailment of our privacy so much as information overload: Each demand for more information from us comes joined with a generous provision of more information to us. Much of the surveillance apparatus provides feedback, the illusion of fair exchange. For instance, we search Google for something (helping it build its profile of what we are curious about, and when and where) and we are immediately granted a surfeit of information, more or less tailored to instigate further interaction. What is known about us is thereby redirected back at us to inform us into submission.

Purveyors of targeted marketing often try to pass off these sorts of intrusion and filtering as a kind of manufactured serendipity. Andrejevic cites a series of examples of marketing hype inviting us to imagine a world in which retailers know what consumers want before the consumers do, as though this were a long-yearned-for miracle of convenience rather than a creepy effort to circumvent even the limited autonomy of shopping sovereignty. “In the world of database-driven targeting,” Andrejevic argues, “the goal is, in a sense, to pre-empt consumer desire.”

This is a strange goal, given that desire is the means by which we know ourselves. In hoping to anticipate our desires, advertisers and the platforms that serve ads work to dismantle our sense of self as something we must actively construct and make desire something we experience passively, as a fait accompli rather than a potentially unmanageable spur to action. Instead of constructing a self through desire, we experience an overload of information about ourselves and our world, which makes fashioning a coherent self seem impossible without help.

If Big Data’s dismantling of the intrinsic-self myth helped people conclude that authenticity was always an impossibility, a chimera invented to sustain the fantasy that we could consume our way to an ersatz uniqueness, that would be one thing. But instead, Big Data and social media foreground the mediated, incomplete self not to destroy the notion of the true self altogether but to open us to more desperate attempts to find our authentic selves. We are enticed into experiencing our “self” as a product we can consume, one that surveillance can supply us with.

The more that is known about us, the more our attention can be compelled and overwhelmed, which in turn leads to a deeper reliance on the automatic filters and algorithms, a further willingness to let more information be passively collected about us to help us cope with it all. But instead of leading to resolution, a final discovery of the “authentic” self, this merely accelerates the cycle of further targeted stimulation. The ostensible goal of anticipating consumer desire and sating it in real time only serves the purpose of allowing consumers to want something else faster.

So as surveillance becomes more and more total, Andrejevic argues, we experience our increasingly specified and information-rich place in this matrix as confusion, a loss of clarity or truth about the world and ourselves. Because excess information is “pushed” at us rather than something we have to seek out, we are always being reminded that there is more to know than we can assimilate, and that what we know is a partial representation, a construct. Like a despairing dissertation writer, we cannot help knowing that we can’t assimilate all the knowledge it’s possible to collect. Each new piece of information raises further questions, or invites more research to properly contextualize it.

Ubiquitous surveillance thus makes information overload everyone’s problem. To solve it, more surveillance and increasingly automated techniques for organizing the data it collects are authorized. In a series of chapters on predictive analytics, prediction markets, and body-language analysis and neuromarketing, Andrejevic examines the variety of emerging technology-driven methods meant to allow data to “speak for itself.” Filtering data through algorithms, brain scans, or markets supposedly unveils an unmediated truth contained within it, letting us bypass the slipperiness of discursive representation and slide directly into the real. Understanding why outcomes occur becomes unnecessary, as long as the correlations hold well enough to make accurate predictions.

This modern-day phrenology is the “end of theory” that Chris Anderson famously announced in an oft-cited 2008 Wired story that argued that the scientific method is “obsolete” in the “petabyte age” of massive data sets. Because big data sets turn up correlations beyond our ability to explain them, the logic of explanation is being rewritten culture-wide. As Andrejevic notes, “An era of information overload coincides ... with the reflexive recognition of the constructed and partial nature of representation.” Authoritative accounts of phenomena become contingent, vulnerable to accusations of being slanted in their incompleteness.

The doubt about narratives plays into the hands of what Andrejevic calls the “postmodern right,” which seizes on the idea that “not only are all truths constructed”—a centerpiece of the critiques of power that emerged from the left in the 1960s and ’70s—“but they are nothing more than constructions,” with no possible basis in fact or causal explanation. Nothing is true and everything is political. Any set of standards that could arbitrate between competing explanations can be exposed as a higher level of bias. So anything one might not want to accept—global warming, endemic sexism and racism, structural unemployment, or really any theory about an aspect of social life—can never be sufficiently demonstrated and can always be dismissed as an incomplete account, a biased curation of information.

The same is true of the self. The vast pool of data about us, combined with the social media to circulate and archive it, makes it hard to escape the sense that any partial representation of ourselves is not the whole truth, is somehow “inauthentic” and easily disprovable with more data. Developing a personal narrative, a life story, can feel futile and outmoded—ineffective at what sociologist Erving Goffman calls “front stage management,” as well as being demonstrably incomplete. So instead we may turn to data management strategies—algorithms and networked recommendations and predictive analytics—not only to understand the world but to understand ourselves. These let us, in Andrejevic’s words, “bypass or short-circuit the problem of comprehension and the forms of discursive, narrative representation upon which it relies.”

Individual doubt about being able to process all the information about ourselves, stoked by media entities capable of overloading us with information that will seem relevant to us, provokes a surrender to the machines that can. Instead of inventing a dubious, distorted, inauthentic life story to make sense of our choices, we can instead defer to something that supposedly can’t be faked: data. Big Data benefits by persuading us that we are the least trustworthy processors of data about ourselves. The degree to which we believe our own life stories are unreliable, to others and to ourselves, is the degree to which we will volunteer more information about ourselves to data miners for processing.

In the era of post-truth, critiquing power as domination through ideological narratives collapses into an endless critique of critique, in which any dispute reveals only that everything is disputable. Andrejevic argues that the powers that be use the “expanded media space to engulf any dominant narrative in possible alternatives, to highlight the indeterminacy of the evidence by promulgating endless narratives of debunkery ... to suck critique into the clutter blender” and “highlight the contingency, indeterminateness, and, ultimately, the helplessness of so-called truth in the face of power.” Whoever already has power can’t be challenged by rational arguments, which can’t escape accusation of bias through omission, if nothing else.

In the absence of an agreed-upon protocol to arbitrate between competing stories, everyone may feel entitled to their own version of the truth, and any empirical attacks on these “truths” can feel like personal attacks on one’s taste and autonomy. Andrejevic points this out in discussing how Fox News maintains its implausible reputation for credibility in surveys of news consumers. But these personal versions of truth are no more concocted in isolation than one’s musical taste; they are more like refractions of the cultural zeitgeist, an emotional climate of what feels true.

Adjusting that emotional climate for various niches becomes a shortcut to social control: By adjusting the “buzz volume” about certain notions (e.g., “Obama is not a legitimate President,” “organic food tastes better,” “job creators are ‘on the sidelines’ because of economic uncertainty”), they can become received wisdom rather than dubious assertions subject to empirical evaluation. And ubiquitous data collection and media consumption mean that specific types of appeals can be targeted at or filtered out for particular audiences in algorithmically driven efforts to mold their feelings. If successful, the affect can then spread socially from these converts through some of the same channels, creating the desired mood fog.

This is why defying the prevailing currents of ideology at the individual level doesn’t constitute meaningful resistance. We are no longer controlled through learning ideological explanations for why things happen and what our behavior will result in. Instead, we are constructed as a set of probabilities. A margin of noncompliance has already been factored in and may in fact be integral to the containment of the broader social dynamics being modeled at the population level. Expanding the scope of individual agency doesn’t disrupt the control mechanisms enabled by Big Data; it may in fact be a by-product of that control’s efficient operation. We are ostensibly free to believe what we want, but then, Andrejevic argues, “Freedom consists of choosing one’s own invented version of history invoked for the purposes of defending the individuating logic of market competition.”

Since Big Data lumps people together on the basis of the statistical implications of actions they would never bother to consciously correlate, they are left to essentially do what they please within confines they cannot perceive. So we may feel that liking a certain kind of music or wearing a certain sort of clothes no longer typecasts us indelibly. Self-conformity is not necessary to identity in the age of the malleable archive, the generative database.

As the recent Facebook mood-manipulation study shows, social-media platforms aim to reshape our experience of the self in terms of what Andrejevic describes as “statistical proxies for affective intensities.” Correlations in data sets are used to shape user experiences (ostensibly to make their use more satisfying), which in turn feed back the behavior the modeling led the administrators to expect. You may never know that you have been affected by the discovery that, to use a speculative example Andrejevic offers, “someone who purchased a particular car in a particular place and buys a certain brand of toothpaste may be more likely to be late in paying off credit card debt.” But it will dictate your economic opportunities and thereby reinforce its “truth.” And because the members of these groups don’t even know they have been put together for purposes of control, they can’t form the sort of solidarity necessary to object to this mechanism of administration. “The logic of aggregation is distinct from collectivity,” as Andrejevic notes, and may be deployed to militate against it.

Big Data promises a politics without politics. The trust necessary to ratify explanatory narratives is displaced from the seemingly intractable debate among competing interests and warped into a faith in quasi-empirical mechanisms. Yet the idea that a higher level of objectivity exists at the level of data is itself a highly biased conception, a story told to abet capitalist accumulation. If unexplained correlations are politically or commercially actionable—and in capitalist society, profit arbitrates that—they will be deployed. The correlations that pay will be true, the ones that don’t will be discarded. Profit becomes truth. (This is especially true of prediction markets, in which truth is literally incentivized.) Making money becomes the only story it is possible to convincingly tell.

The denigration of objectivity joined with an improving facility at manipulating the emotional milieu leads to a nightmare political scenario that Andrejevic outlines:

This asymmetry [of control of databases] would free up politicians to engage in “infoglut” strategies in the discursive register (promulgating reports that contradict themselves endlessly, pitting “expert” analysts against one another in an indeterminate struggle that does little more than fill air time, or perhaps reinforce preconceptions) while simultaneously developing new strategies for influence in the affective register. Fact-checkers would continue to struggle to hold politicians accountable based on detailed investigations of their claims, arguments, and evidence, while politicians would use data-mining algorithms to develop impulse- or anxiety-triggering messages with defined probabilities of success.

Far from being neutral or objective, data can be stockpiled as a political weapon that can be selectively deployed to eradicate citizens’ ability to participate in deliberative politics.

Many researchers have pointed out that “raw data” is an oxymoron, if not a mystification of the power invested in those who collect it. Subjective choices must continually be made about what data is collected and how, and about any interpretive framework to deploy to trace connections amid the information. As sociologists Kate Crawford and danah boyd point out, Big Data “is the kind of data that encourages the practice of apophenia: seeing patterns where none actually exist, simply because massive quantities of data can offer connections that radiate in all directions.”

The kinds of “truths” Big Data can unveil depend greatly on what those with database access choose to look for. As Andrejevic notes, this access is deeply asymmetrical, undoing any democratizing tendency inherent in the broader access to information in general. In his 2007 book iSpy: Surveillance and Power in the Interactive Era, he argues that “asymmetrical monitoring allows for a managerial rather than democratic relationship to constituents.” Surveillance makes the practice of “making one’s voice heard” basically redundant and destroys its link to any intention to engage in deliberative politics. Instead politics operates at the aggregate level, conducted by institutions with the best access to the databases. These data sets will be opened to elite researchers and the big universities that can afford to pay for access, Crawford and boyd point out, but everyone else will be mostly left on the sidelines, unable to produce “real” knowledge. As a result, institutions with privileged access to databases will have the ability to determine what is true.

This plays out not only with events but also with respect to the self. Just as politics necessarily requires interminable intercourse with other people who don’t automatically see things our way and who acknowledge alternate points of view only after protracted and often painful efforts to spell them out, so does the social self. It is not something we declare for ourselves by fiat. I need to negotiate who I am with others for the idea to even matter. Alone, I am no one, no matter how much information I may consume.

In response to this potentially uncomfortable truth, we may turn to the same Big Data tools in search of a simpler and more directly accessible “true self,” just as politicians and companies have done. Identity then becomes a probability, even to ourselves. It ceases to be something we learn to instantiate through interpersonal interactions and becomes something simply revealed when sufficient data exists to simulate our future personality algorithmically. We are left to act without any particular conviction while awaiting reports from various recommendation engines on who we really are.

In this sense, Big Data incites what Andrejevic, following Žižek, calls “interpassivity,” in which our belief in the ideology that governs us is automated, displaced onto a “big other” that does the believing for us and relieves us of responsibility for our complicity. Surrendering the self to data processors and online services makes it a product to be enjoyed rather than a consciousness to be inhabited.

The work of selfhood is difficult, dialectical, requiring not only continual self-criticism but also an awareness of the degree to which those around us shape us in ways we can’t control. We must engage them, wrestle with one another for our identities, be willing to make the painful surrender of our favorite ideas about ourselves and be vulnerable enough to become some of what others see more clearly about us. The danger is that we will settle for the convenience of technological work-arounds and abnegate the duty to debate the nature of the world we want to live in together.

Instead of the collective work of building the social, we can settle for an automatically generated Timeline and algorithmically generated prompts for what to add to it. Data analysts can detect a correlation between two seemingly random points—intelligence and eating curly fries, say, as in a 2013 PNAS research paper by Michal Kosinski, David Stillwell, and Thore Graepel that made the rounds on Tumblr and Twitter in January—and potentially kick off a wave of otherwise inexplicable behavior. “I don’t know why I am eating curly fries all of a sudden, but that shows how smart I am!” Advertisers won’t need a plausible logic to persuade us to be insecure; they can let spurious data correlations speak for them with the authority of science.

Unlike the Facebook mood-manipulation paper, the curly-fries paper enjoyed a miniviral moment in which it was eagerly reblogged for its novelty value, with only a mild skepticism, if any, attached. This suggests the seductive entertainment appeal these inexplicable correlations can provide: they tap the emotional climate of boredom to spread an otherwise inane finding that can then reshape behavior at the popular level. We’re much more likely to laugh about the curly-fries paper and pass it on than to absorb any health organization’s didactic nutrition information. Our eagerness to share the news about curly fries corresponds with our willingness to accept it as true without being able to understand why. Its WTF incomprehensibility enhances its reach and thus its eventual predictive power.

Likewise, the whimsical reblogging of the results from patently ridiculous online tests hints at how we may opt in to more “entertaining” solutions to the problem of self. If coherent self-presentation that considers the needs of others takes work and a willingness to face our own shortcomings, collaborating with social surveillance and dumping personal experience into any and all of the available commercial containers is comparatively easy and fun. It returns to us an “objective” self that is empirically defensible, as well as an exciting and novel object for us to consume as entertainment. We are happily the audience and not the author of our life story.

Thus the algorithm becomes responsible for our political impotence, an alibi for it that lets us enjoy its dubious fruits. When we trade narratives for Big Data, our emotions are left with no basis in any belief system. You won’t need a reason to feel anything, and feeling can’t serve as a reliable guide to action. Instead we will experience the fluctuation of feeling passively, as spectators to the spectacle of our own emotional life, which is now contained in an elaborate spreadsheet and updated as the data changes. You can’t know yourself through introspection or social engagement, but only by finding technological mirrors, whose reflection is systematically distorted in real time by their administrators. Let’s hope we don’t like what we see.