The algorithm is your new boss and the factory is everywhere

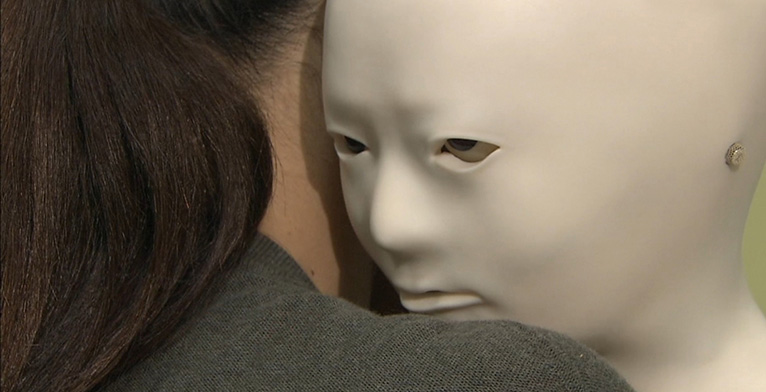

The dystopian prospect of being enslaved to artificial intelligence has arrived. Sort of. The sensational fantasies of machine domination have been replaced with a banal reality: the AI isn’t a murderer, it’s an insecure boss who needs constant reassurance that its jokes are funny.

In August of last year, Facebook announced the launch of a new service called M, “a personal digital assistant [...] that completes tasks and finds information on your behalf.” However, unlike similar offerings from Apple and Google, which are fully automated, M relies on both machine-generated answers and human labor to respond to queries. M is “powered by artificial intelligence that’s trained and supervised by people” (David Marcus, VP of Messaging Products). In practice, this means that every auto-generated response M makes to users is then vetted (and potentially adjusted) by a human employee. A Wired article on M explains the workflow: “...the AI can do most of the work for simpler tasks, like telling a joke. It’ll query an Internet joke API--a service that supplies jokes--and a trainer will approve the joke if it’s funny.”

As employees vet and tweak M’s responses, they are also providing valuable data that shapes Facebook’s automated system, training it to offer better answers in the future. These customer-service workers therefore have two bosses: Facebook M users and Facebook M itself. To users of the system, Facebook’s workers provide a low-cost personal concierge service, filling in the gaps that the machine can’t handle. But more importantly, they act as personal tutors for Facebook M itself. Every time Facebook employees approve or adjust the system’s automated responses, they improve not just the particular response but the system as a whole, giving Facebook a potential edge against competing services, and expanding the dataset that will inevitably, and ironically, render their own jobs obsolete. The more they work, the more precarious their position becomes and the better they set the stage for their own liquidation. M’s approach to automation, where humans work for algorithms, makes explicit the exploitative dynamic that exists in all machine learning systems.
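The double duty described above can be made concrete with a short sketch. This is not Facebook's code: `handle_query`, `TrainingLog`, `toy_draft`, and `toy_trainer` are hypothetical names invented for illustration, and the only assumption is the workflow reported here, in which a machine drafts, a human approves or adjusts, and the result is kept. The point it shows is that a single act of review both serves the user and adds a labeled example to the company's dataset.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewedResponse:
    """One unit of the trainer's work: a query, the draft, and the answer sent."""
    query: str
    machine_draft: str
    final_answer: str

    @property
    def was_adjusted(self) -> bool:
        # True when the human had to correct the machine's draft.
        return self.machine_draft != self.final_answer


@dataclass
class TrainingLog:
    """Hypothetical store of every reviewed response, kept for later training."""
    examples: list = field(default_factory=list)

    def record(self, query, machine_draft, final_answer):
        example = ReviewedResponse(query, machine_draft, final_answer)
        self.examples.append(example)
        return example


def handle_query(query, draft_fn, review_fn, log):
    """Machine drafts, human reviews, user gets the answer, log keeps the labor."""
    draft = draft_fn(query)
    final = review_fn(query, draft)
    log.record(query, draft, final)
    return final


# Toy stand-ins for the automated system and the human trainer (assumptions).
def toy_draft(query):
    if "joke" in query:
        return "Why did the chicken cross the road?"
    return "I don't know."


def toy_trainer(query, draft):
    # Approves the joke as-is, rewrites anything the machine could not handle.
    return draft if "joke" in query else "Let me look that up for you."


log = TrainingLog()
handle_query("tell me a joke", toy_draft, toy_trainer, log)
handle_query("book me a table", toy_draft, toy_trainer, log)
```

Each call returns an answer to the user, but the log grows either way: an approval confirms the draft, and a correction supplies the answer the machine lacked.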

Machine learning is an umbrella term that encompasses a set of techniques for getting computers to do things without having to explicitly give them instructions. Unlike typical computer programs that perform a specific set of operations in the same way unless a programmer adjusts the code, machine learning programs generate their own rules for operating and have the capacity to improve themselves over time.
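A toy contrast may help make the distinction concrete. The sketch below is a deliberately minimal illustration, not a description of any real system: `fixed_rule`, `learn_threshold`, and the "hours watched" data are all invented for the example. The first function behaves the same way until a programmer edits it; the second derives its cut-off from labeled examples, so its behavior shifts as soon as people supply more data.

```python
def fixed_rule(hours_watched):
    """A conventional program: the cut-off of 5.0 was chosen by a programmer."""
    return hours_watched >= 5.0


def learn_threshold(examples):
    """Pick the cut-off that misclassifies the fewest labeled examples.

    `examples` is a list of (value, label) pairs, where label is True or False.
    """
    candidates = sorted({value for value, _ in examples})

    def errors(threshold):
        # Count examples where the rule "value >= threshold" disagrees with the label.
        return sum((value >= threshold) != label for value, label in examples)

    return min(candidates, key=errors)


# Invented data: hours watched, and whether the person liked the recommendation.
initial_data = [(1.0, False), (2.0, False), (3.0, True), (4.0, True)]
first_rule = learn_threshold(initial_data)

# One more person interacts with the system, and the learned rule moves.
more_data = initial_data + [(2.5, True)]
second_rule = learn_threshold(more_data)
```

Nobody edited the code between the two calls. The only thing that changed was the data, which is exactly what users provide.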

Until recently, storing and processing data at the scale that machine learning requires was impossible. Because they create highly specific models of reality determined by the data they are fed, machine learning systems require massive datasets both to initialize the system and then (often) to evolve it. The algorithm recommends a movie, tells a joke, drives a car, captions a photo--and both the successes and the failures become data that is then used to change the algorithm one way or another.

For tech giants like Facebook and Google, machine learning forms a core part of their business model. For example, Facebook uses machine learning to continually adjust what you see in your news feed by using your past activity to make a prediction about what you’re interested in. It adjusts itself based on how you respond to the content it feeds you--did you “like” it? Did you click the link? Google uses machine learning to determine search results, optimize advertising and so on. However, as a system for making money, machine learning is distinct from more traditional methods, both virtual and physical. Its reliance on exploiting human-generated data that we wittingly or unwittingly give away fundamentally alters both what it means to work and what it means to be an employee. As such, machine learning systems can be understood as a means of production: they act as factories producing economic value on behalf of the companies that control them. They also exploit us while doing so.

In certain scenarios, this relationship is direct and undeniable, while in others, it is less clear-cut. Uber, for example, is currently in the very early stages of moving from human drivers to automated drivers, and has deployed a small fleet of autonomous vehicles in Pittsburgh. The machine learning system powering these cars was undoubtedly produced from data generated by Uber's current human drivers. Every time a driver completes a trip, they provide valuable data via their smartphones that Uber then leverages as a training set for future automated replacements. However, exploitation does not always take the form of humans literally teaching computers to replace them.

Whenever we interact with software, websites, or businesses built using machine learning systems, we provide data that trains or improves those systems. The frequently quoted line that “if you are not paying for it, you’re not the customer--you’re the product being sold” should be amended: you’re no longer just the product, you’re also the unpaid laborer. If we understand work as any human activity that generates wealth, and our interactions with machine learning systems as incremental contributions to the means by which companies make money, then we are in effect laboring without compensation nearly every time we use the Internet. Machine learning systems are factories, and we are always working on improving the factory.

The goal of AI systems is to produce outputs that outperform human counterparts. Recent advances in machine learning techniques, such as the “deep learning” that powers Google’s AlphaGo program, have led to systems able to accomplish human-like tasks that were once theorized to be virtually impossible in computer science. Both the breathless enthusiasm and the paranoid speculation surrounding these advancements center on the idea that machine capacity will exponentially overtake human capacity. This event, typically called “the singularity,” is seen as either apocalyptic or liberating by those who believe it is inevitable.

However, it doesn’t really matter if a machines-take-over-the-world event actually occurs. Both the predicted apocalyptic and liberatory outcomes have already come to pass. When we understand machine learning and AI to be means of production, it becomes evident that automated systems have already produced both realities: one for those who control and benefit from the means of production, and one for those who labor on the means of production and are excluded from its benefits.

Nearly anything we do that is somehow trackable or legible as data can be understood as uncompensated labor that trains, or could potentially train, a machine. Even if particular clicks, likes, or page views have not yet been plugged into algorithm optimization, the data we collectively generate can be deployed at any time as a training set for proprietary systems that we have no collective control over. And, even when workers are paid for data that trains machines--as was likely the case in systems like Siri, and is a frequently occurring request on digital labor marketplaces like Amazon’s Mechanical Turk--their employment ends the moment enough data has been collected to complete the training. The resulting autonomous system, however, can continue to generate wealth indefinitely.

In all of this, the issue at hand is not the technology itself but who controls and benefits from it. In the paradigm of machine learning, data itself is labor, and the displacement of humans by automated systems is an inevitable consequence. Regardless of whether we train machines willingly or unwillingly, with or without compensation, the eventual outcome is always a money-making machine--and the relationship between the owners of that machine and the laborers who built it is always one of severe exploitation, since the laborers have not only built a product, they have also built a product-building automaton.

There is nothing fundamentally wrong with automatons taking over human labor. In fact, it is a desirable outcome if the automatons are collectively owned or controlled by the labor force they replace. The tragedy of automation and AI taking over, the fear of the “singularity,” is actually just the realization of a fundamental characteristic of capitalism: those who don’t control the means of production will always be excluded from the benefits of their labor.