An audio version of this essay is available to subscribers, provided by curio.io.

2. Capital D is used to signify Deaf as an identity and community, and it’s important to note some Deaf people do not identify as disabled.

In my interviews with a group of friends and colleagues about assumptions in technology design about their disability, the most common experience was of exhaustion. New technologies often require the hard work of people who use them and who have to adjust to continuous updates. Heather Vuchinich, who is a writer and astrologer, says that closed captions on television and movies “are often of poor quality, too small, or unavailable.” More important, she notes, is “the psychological effects of depending on technology for something ‘normal’ people take for granted.” Vuchinich sometimes jokes that she’s part “cyber” because of her dependence on hearing aids, but this sense of being cyber can also mean feeling subhuman.

Even tech supposedly designed to “help” disabled users can sometimes discriminate against disabled people. A web browser can easily detect when someone is using a screen reader and direct them to limited functionalities specifically designed for blind users, even if they haven’t consented to being channeled to a separate user experience or asked for or wanted such functions. Leona Godin, a writer who holds a PhD in 18th-century English literature, reports that when she accesses library websites they continually suggest she use a library for children and nonacademic readers who are blind. “To assume that a blind person is not also a scholar needing to access the full catalogue feels a little insulting,” she says. The word “inclusion” does a pacifying kind of work, rendering users as a generalized body of people without regard for the proliferation of varying identities and needs under the banner of “disabled.”

This is because even inclusive technology is still often guided by the belief that disability must be cured. Cure is goal-oriented, causal, and finite. Cure assumes that when a user is in one state, typically illness or impairment, they can get to the other state, a complete negation of their previous one, through technological or medical means. This assumes the given state only exists as a problem to be solved, and that its negation is an obvious moral good. While the narrative of cure applies to people who are ill or temporarily impaired, disabled people need a different conceptual framework. Even with the most advanced technology, disability cannot, and sometimes should not, disappear from people. There are disabled people whose relationship with their own bodily functions and psychological capabilities cannot be considered as a linear movement from causation to result. Narratives of technology as cure override the real varieties in people’s needs and conditions and falsely construct binary states (one or the other, abled or disabled), overshadowing everything between or outside of those options.

Many members of the Deaf community take pride in their identity, and in advocating for their rights. The idea of cure can lead to the erasure of identity, giving a false promise that technology can solve a cultural problem of barriers that exclude disabled people. In the 2008 documentary Examined Life, artist and activist Sunaura Taylor explains the difference between impairment, which she describes as “our own unique embodiments,” and disability, which is “the social repression of disabled people.” Disability, in this light, is a condition of a society that disables people. As an ableist social construct of what a normal body is and does, technology framed as cure harms disabled people who are more vulnerable to breaches to and compromises of their privacy. Narratives of technology as a solution for people to overcome their disability thus reinforce oppressive ideas like what constitutes a socially acceptable body. Such narratives in turn take space away from the public imagination about what needs to be done to abolish ableist thinking.

Care, in contrast to cure, is a form of stewardship between people who support each other in communication, action, and social engagement. It is actualized by extending one’s mindfulness of another person’s dignity and feelings, while respecting their independence. Care is made possible when parties are mutually accountable for each other’s well-being. Caring differs from an explicit division of power and is not a transfer of the decision-making process, because it is based on a sense of interdependence, which is a free exchange that cannot be contracted or automated. If the narratives of technology-as-cure focus on the explicit, obvious, visible conditions of enhancement, technology-as-care focuses on more implicit, less visible conditions that are difficult to identify. Technology can be used for care when it buttresses our autonomy and does not assume our decisions in advance. While machines can learn, automate, and execute certain tasks faster than humans, human deliberation—a subtle murmuring or hesitation—is often a sign of deep thinking. This deliberation should not be seen as a matter of inefficiency but rather as a sign of care that cannot be automated. The slowness may be the integral element of human agency.

Artificial Intelligence, on the other hand, is often framed as the latest solution to complex problems, hyped and tossed around conveniently in all situations due to its ontological expansiveness. But systems of AI are already deeply embedded in our most mundane electronic communication, from what’s surfacing on your Facebook timeline, to search suggestions on Google and so on. AI can be broken down into several parts: broad data collection that is fed into learning algorithms, which power “intelligent” automated decisions. The final point of “intelligence” requires attention. As researchers M. C. Elish and Tim Hwang write in a 2016 book, An AI Pattern Language, AI is essentially “a computer that resembles intelligent behavior. Defining what constitutes intelligence is a central, though unresolved, dimension of this definition.” AI’s intelligence most often manifests in the seemingly autonomous organization of information and its applications, for example, Internet advertisements that suggest products based on a complex user profile. Most Internet corporations collect a wide range of data in order to extract behavior patterns and predict future interaction. The algorithms that continually evolve models for organizing information are the basis of machine learning, which, according to Elish and Hwang, “enable a computer to ‘learn’ from a provided dataset and make appropriate predictions based on that data.” The scale at which the model learns and transforms, along with the growing amount of data from people’s interactions with computational systems, poses new points for consideration.

There are innovations for disabled people being made in the field of accessible design and medical technologies, such as AI detecting autism (again). However, in these narratives, technologies come first—as “helping people with disabilities”—and the people are framed in subordinate relation to them. Alarmingly, narratives around AI often present it as a covert release from social responsibility. The field of precision medicine attracts a techno-optimist vision of tailored, on-demand medical services based on genetic data that presents itself as the alternative to the current “baseline average” medical service. An imaginary scenario includes preemptive medical intervention prior to the appearance of symptoms, based on algorithmic analysis of data that is aggregated and managed free of the conventional medical regulations. These narratives flatten the spectrum between cure and care, illness and disability, medical intervention and inclusive adaptation.

The new normals of impersonal, “intelligent” services may come with unseen repercussions. Machines learn, oftentimes with mistakes. When no person can be held responsible for the medical decisions made by AI, who is accountable for a clinical mistake or instance of discrimination? AI will have a material impact on who gets considered worth saving, reifying existing social hierarchies by further alienating those who are already excluded. AI can render disabled people invisible from the database, if they have communication disabilities, or unique body features or psychological capacities. Alternately, it can make disabled people hypervisible by profiling their identity and render them more vulnerable to threat, surveillance, and exploitation. Either way, AI performs tasks according to existing formations in the social order, amplifying implicit biases, and ignoring disabled people or leaving them exposed.

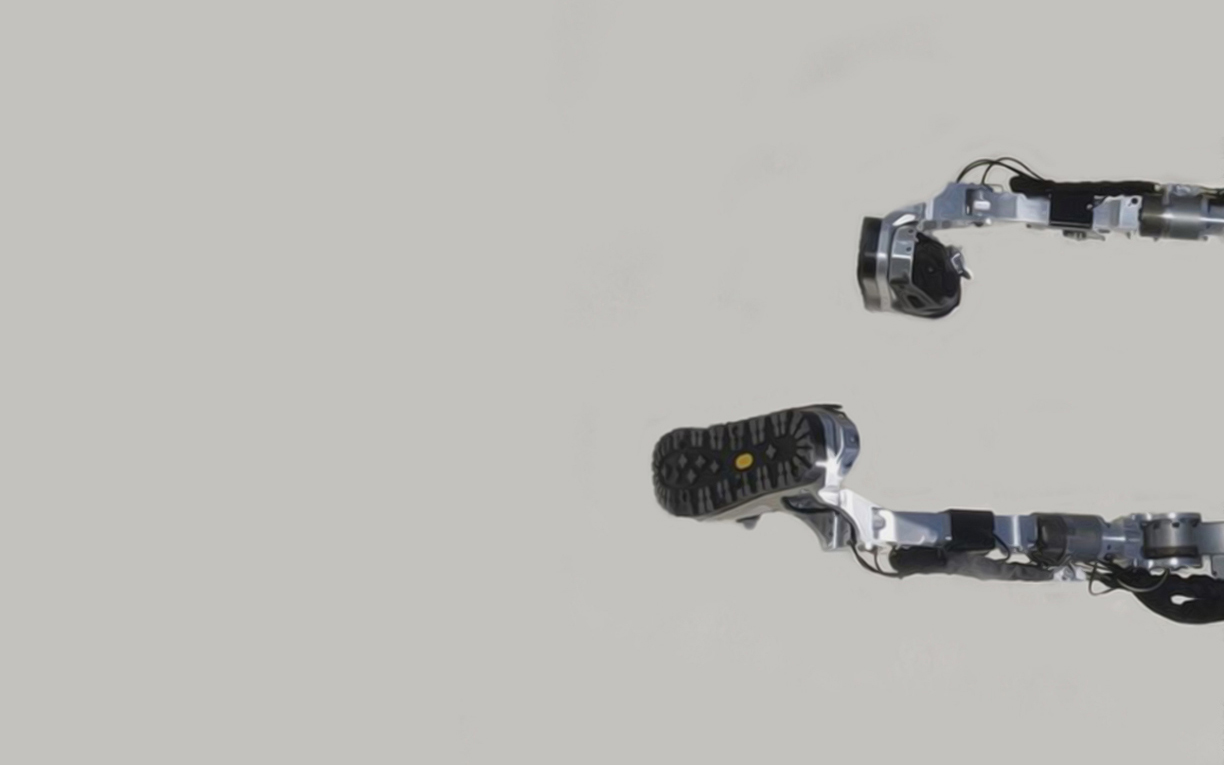

What kind of technological advances do disabled people really want, then? To start, they seek necessary representation in the innovation and design process. Too often, sign-language recognition systems try to be all-inclusive and in the process lose usefulness. Instead, architect Jeffrey Mansfield says, “AI that limits recognition to daily commands one might use while driving, watching TV, or changing thermostats . . . can be much more robust in our daily lives. Self-learning sign-language recognition from both first-person and third-person POVs would be useful, as well as self-learning, real-time automatic transcription for multiple speakers.” These ideas are grounded in the intricate life experiences of a Deaf person. Furthermore, Mansfield points toward the need for updated cultural narratives around technology as inherently more advanced: “People have assumed that YouTube auto-captioning or dictation offers adequate accessibility that can replace other, more analog forms of accessibility such as sign language interpreting or accurate captioning.” Mainstream narratives of new technology often set aside the social and civil advances that are still necessary to truly address the needs of disabled communities for practical access and the ability to decide what they want. Leona Godin suggests “a functional eyeball—I could wear it on my head or as a necklace or sunglasses, but if it told me what was happening in front of my eyeballs, my brain could take care of the rest.” It’s important to note her insistence on retaining control of what she does with the added information. The kinds of prosthetics she wants would enhance her autonomy, not supplant it.

To empower disabled people through technology, Chancey Fleet, whose education practice focuses on accessibility, points to a need to help people become critical users, saying, “Emerging technology users may learn enough to succeed at immediately pressing tasks, but if they don’t progress to becoming critical users they are very unlikely to encounter the idea that their data and interaction with the system might be used in ways they don’t expect.” Fleet suggests that “if an application is looking at my browsing and purchase history and curating what it shows me in response, there should be a place where I explicitly allow or don’t allow that (like I grant microphone access, or don’t).” This is part of demystifying algorithms, one of the objectives in computer-programming workshops I’ve facilitated for people who are Deaf, Blind, or on the autism spectrum or who have a combination of disabilities. The end goal is, as dancer and choreographer Alice Sheppard proposes, “disability leading the design process as positive, generative artistic forces.” Artistic provocation, calls for political action, and technological research and development can come together as a conceptual framework. On disabled people’s approach to technology, the scholar Meryl Alper, in her book Giving Voice: Mobile Communication, Disability, and Inequality, writes, “They are not passively given voices by able-bodied individuals; disabled individuals are actively taking and making them despite structural inequality.” Technology only becomes useful for people with disabilities through a combination of community support, societal acceptance of the varieties of disability, and appreciation of unique individual circumstances.

Given the power of technological narratives to influence our ideas of future societies, changing these narratives should lead to renewed social appreciation for disabled people. Change should occur first through the acknowledgement of disabled people as users, communities, and vital parts of the public. An appreciation of their full humanity would mean disabled people do not have to fight for what is available to nondisabled people by default. And there must be readily available opportunities for disabled people to design technology for themselves. Their everyday needs and imagination need to be at the forefront of design, not an afterthought in the name of a benevolent inclusion that can render people unable to participate in society. Shifting the narrative from technology-as-cure to technology-as-care can facilitate a dialogue about disability as a human condition for everyone to consider, not just people with impairments. Technology-as-care does not regard disabled people as those to be “cared for” by others; rather, it appreciates disabled people’s tenacity and creativity in designing technology that people can use to “care for” each other.