Would you date a robot? It seems pretty safe. The existence of pickup-artist systems like the ones described in Neil Strauss's The Game suggests that there is a prevalent fantasy of removing the risks from dating encounters by implementing the equivalent of computer code. The haphazardness of interpersonal chemistry and the daunting asymmetries of personal revelation are replaced with a program ("the game") that seems to comfort the pickup artist and the picked-up target alike. If you execute certain subroutines, you can rely on the calculable probability of certain tasks being completed according to established protocols and within established parameters.

Basically you treat the person being picked up like a blinking cursor on a command line, awaiting lines of programming, and that person in turn can enjoy the comforting experience of being programmed, of surrendering safely to a familiar script -- the same semi-passive thrills we experience when we surrender to a movie or a book, when we let it "program" us. We let serial TV shows, for example, put us in suspense about what are after all nonexistent characters whose fate is not autonomous but entirely sealed in advance. I don't remember exactly when this dawned on me -- far too late, definitely -- but I started enjoying sad/sappy movies a lot more when I let myself cry when the movie seemed to expect it of me instead of thinking I was somehow beating the system or proving my superiority by resisting it.

Fictions are engines for suspending our disbelief. If fictions are well-contrived, they let us reach that captivating state of surrendering skepticism. And if you don't want to suspend your disbelief, there is little point in consuming fiction. Suspending disbelief isn't merely the prerequisite for reaching some other affective destination; it is the destination, the pleasure itself.

Pickup artists are basically fiction writers working with the dating milieu as a medium. They provide genre entertainment. It's not for everyone, by any means, but it's a standard format that some people respond to and enjoy.

But I don't think many people mistake that sort of entertainment programming for a way to create love. Love is in many ways defined by escaping systematization. It registers in spontaneous moments, in unexpected connections between people that neither would ever have thought to try to program and that form the ineffable substance of intimacy. Love, as most people seem to see it, is supposed to individuate the partners, capture and reveal their personal uniqueness to the other, and the unfolding of love is the pursuit of more and more of those occasions that allow that uniqueness to express itself.

A real-life love story has to be as unique and unpredictable as the people involved imagine themselves to be. It's not about suspending disbelief and permitting self-forgetting, but building belief and allowing for self-discovery, finding the wherewithal to believe that we have a "real" self and that it is valuable and compelling enough that someone else would want to know all about it. No one is "authentic" in isolation; you have to be authentic for someone else, who can confirm your genuineness. If you are alone being authentic, you are just being.

The practice of love, then, is a matter of attuning to these unique, "authentic" aspects of the other (or fabricating them collaboratively and positing them in each other's pasts as things that you are now discovering about each other's character -- give the relationship an "I have always been here before" feeling). This practice has all sorts of sideshows to it, different kinds of pleasure coupledom can generate along the way, but this being recognized as the one irreplaceable and totally unique being for the other seems to be the essence of love as it's currently constituted ideologically.

But if you are ideologically committed to the postulate of artificial intelligence and insist that robots will be able to do anything humans can do, as David Levy does in his 2007 popular-science tract Love and Sex With Robots, this conception of love presents problems. Robots might be equipped to recognize your uniqueness, but there will never be anything irreplaceable and nonreplicatable about the robot.

What's required to make human-robot love conceivable is a redefinition of love to suit the model of programming. So, drawing on psychological research, Levy reduces love to an object of science that can then be analyzed at the level of abstraction and generalities suitable for transformation into code. He identifies what he deems the universal causes of love (there are 10 of them, including "desirable characteristics of the other" and "filling needs"), presents some survey data that purports to reveal what people get out of being in love and what traits they like in partners, and then concludes that all of this data can be used to program robots that can love us according to this scientifically certified checklist.

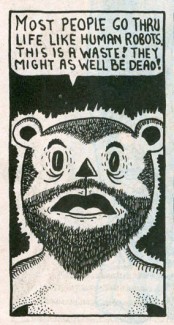

This whole method seems incredibly obtuse. The way science is used to frame love as a problem to solve constructs love as something no humans would recognize as such. The method assumes that humans are already robots themselves, not merely susceptible to being programmed but also articulate about precisely what programs they want to run. Love is not dynamic, not something discovered in a unique relation's unfolding, but a static object lodged in each individual's consciousness. Unlike Lou Gramm, I already know what love is, and I want only for someone or, as Levy would have it, something to elicit those set responses in me.

But no one breaks out hierarchical rankings for the qualities they appreciate in their beloved, no one simply stacks their partner up against a checklist of desirable duties performed; the sort of scorekeeping that goes on in a relationship is far more complicated than that. But Levy blunders along, insisting that if humans say they want x, y, and z from a relationship, and robots can do x, y, and z, then humans will love robots -- and vice versa, since by his logic, if a human thinks a robot loves him, then that robotic love is a fact and that establishes what the robot "feels". It's akin to thinking that since reading about Anna Karenina made you feel something, Anna Karenina is therefore a real person and intended to make you feel that way. (Presumably this is precisely what some OOO partisans argue? I think I am too ignorant to understand what they are talking about.)

Levy's insistent anthropomorphizing of robots based on how humans see them was incredibly frustrating to read, but it seemed to stem from his dogmatic intention of granting robots of the future genuine agency.

It makes no sense to imagine robots with the agency to choose to love us. But if we stop thinking of robots as potential human surrogates and start thinking of them as something more akin to an engrossing novel or TV show -- a medium -- then it's easy to imagine people dating robots. People read alone, they watch TV alone, they play games alone -- why wouldn't they enjoy, alone, the immersive experience a robot could be programmed to provide? Especially if the experience prompts us to forget our aloneness.

Being with a robot wouldn't make us any less alone, but that is not really relevant, unless you believe that people are not to be trusted in choosing what sort of entertainment they want and that when they suspend disbelief, they are in danger of suspending it for good. People may fall in love with robots in the future, but this will be no different from falling in love with characters in books, or with books themselves.

Rather than being regarded as not-quite-adequate humans, robots can be seen as immersive, 3-D novels. We are not convinced of their genuine emotionality so much as we suspend disbelief about their fundamental lack of it, and this itself is satisfying; it brings a pleasure we might call love if it lasts long enough.

When novels first became a commercial product, there was a fairly prevalent fear that they were addictive, and the weak-minded (typically women) would be vulnerable to losing themselves completely to them, as though they had no control over their ability to invest themselves imaginatively. For some critics, every act of vicarious identification was seen as morally corrupting. Thus such critics would argue that women needed to be protected from vicarious experience for their own safety.

Something similar seems to be going on when researchers like Sherry Turkle fret about people forgoing their own humanity and choosing robotic affection. This seems to stem from the same revulsion at other people's imaginative investments. I fall into this trap a lot; I'll believe myself capable of all sorts of imaginative acrobatics and vicarious projections and simultaneous identifications and resistant readings for pleasure, whereas other people are only capable of the most literal sort of engagement with entertainment. In my mind, I turn them into robots who are programmed by the text.

Suspending disbelief sometimes can mean becoming an emotional robot. You put up a dating profile to attract search traffic, and you imagine it could be a code that expresses precisely what you want from a dating experience and will somehow bring it to fruition: the algorithms will work their constitutive magic and produce this person who is precisely what you ordered, someone who fits the genre. And you can conduct the entire relation with that someone within those generic boundaries, according to the script you put out there, or the script that exists for first dates, or whatever seems most comfortable or expedient. If we perfect online dating, we won't need robot lovers because the dating platform will roboticize us.

But there's another way to suspend disbelief as well that's not about passive obedience and surrendering to a script. Entertainment gets us to pursue the suspension of disbelief as a pleasurable end in itself, because we trust that the scripted, produced nature of the entertainment product will protect us from the radical vulnerability of dropping our guard.

If we suspend disbelief outside the script, however, if we resist the temptation (the defense mechanism, really) of repackaging our experiences in terms of entertainment, then suspending disbelief can become not an end but a means. It can open us unconditionally and carry us toward something terrifyingly unpredictable, something beyond what's expected or what we planned for, something that's much more like love -- the realization that another person is not like a robot at all and can't be programmed and that they are there with you in an ineffable moment of presence for reasons that will forever remain uncoded, maybe for no reason at all.