In addressing the insecurities of postmodern thought, Big Data falls prey to some of the same issues of interpretation

Big Data fascinates because its presence has always been with us in nature. Each tree, each drop of rain, and the path of each grain of sand both responds to and creates millions of data points, even on a short journey. Nature is the original algorithm, the most efficient and powerful. Mathematicians since the ancients have looked to it for inspiration; techno-capitalists now look to unlock its mysteries for private gain. Playing God has become all the more brisk and profitable thanks to cloud computing.

But beyond economic motivations for Big Data’s rise, are there also epistemological ones? Has Big Data come to try to fill the vacuum of certainty left by postmodernism? Does data science address the insecurities of postmodern thought?

It turns out that trying to explain Big Data is like trying to explain postmodernism. Neither can be summarized effectively in a phrase, despite their champions’ efforts. Broad epistemological developments are compressed into cursory, ex post facto descriptions. Attempts to define Big Data, such as IBM’s marketing copy, which promises “insights gleaned” from “enterprise data warehouses that implement massively parallel processing,” “real-time scalability” and “parsing structured and unstructured sources,” focus on its implementation at the expense of its substance, decontextualizing it entirely. Similarly, definitions of postmodernism, like art critic Thomas McEvilley’s claim that it is “a renunciation that involves recognition of the relativity of the self—of one’s habit systems, their tininess, silliness, and arbitrariness,” are accurate but abstract to the point of vagueness.

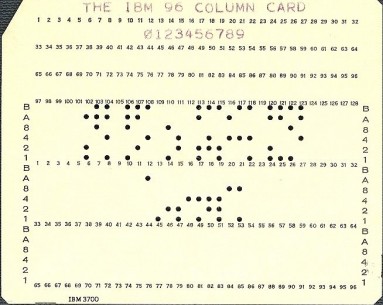

Just as a richer definition of postmodernism would need to span an array of scientific, historical, and philosophical trends — the demise of certainty in European values, the zenith of the modernist myth of the artist and the retreat of form, the rise of the idea in aesthetics at the expense of execution, the crisis of rationality brought on by World War II, the Cold War and the imminent threat of nuclear annihilation — a definition of Big Data would have to assimilate the roots of information theory, the rise of networked computing, e-commerce, cloud computing and enterprise software, the open-source movement, the emergence of the prosumer, mobile phones, NoSQL data architecture, and MapReduce, or the various technologies now used by applications to handle ever more frequent data access.

Though both are projects that address positions about empiricism and meaning making, postmodernism and Big Data are in some senses opposites: Big Data is an empirically grounded quest for truth writ large, accelerated by exponentially expanding computing power. Postmodernism casts doubt on the very idea that reason can unearth an inalienable truth. Whereas Big Data sees a plurality of data points contributing to a singular definition of the individual, postmodernism negates the idea that a single definition of any entity could outweigh its contingent relations. Big Data aims for certainties — sometimes called “analytic insights” — that fly in the face of postmodernist doubt about knowledge. Postmodernism was confined to the faculty lounge and the academic conference, but Big Data has the ability to dictate new rules of behavior and commerce. An e-commerce outfit is almost foolish not to analyze browsing data and algorithmically determine likely future purchases, or as Jaron Lanier put it in Who Owns the Future?, “your lack of privacy is someone else’s wealth.”

Still, both, in their own way, are responses to predicaments brought about by too much data. Too unstructured, too voluminous, too variegated, all being created at far too fast a rate — a velocity at once both off-putting and cheered. Unsurprisingly, Big, like post, is a relative designation.

***

Database administrators and data scientists define Big Data with a simple but useful litmus test: Big Data occurs when an organization has more data than it can reasonably process and store. This relates to the point at which an organization’s data set requires a parallel-programming model — distributing tasks concurrently over many computers. But what is the point at which the size of a cultural data set becomes unmanageable for traditional agents? It depends on historiographical traditions and the logic of Western humanism.
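For readers who have not met a parallel-programming model, the technical half of that litmus test can be sketched in a few lines. What follows is only an illustration, not a description of any particular Big Data product: threads on one machine stand in for the "many computers," and the function names and the three sample strings are invented for the example. Each worker counts the words in its own slice of the data, and the partial counts are then merged into one total.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_words(chunk):
    """Map step: one worker counts the words in its own slice of the data."""
    return Counter(chunk.split())


def parallel_word_count(chunks, workers=3):
    """Hand each chunk to a worker, then merge the partial counts (reduce step)."""
    # Assumption: threads stand in for separate machines in a real cluster.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(count_words, chunks))
    total = Counter()
    for partial in partial_counts:
        total.update(partial)  # Counter.update adds counts together
    return total


# Hypothetical sample data, invented for this sketch.
chunks = ["big data big", "post modern data", "big post"]
totals = parallel_word_count(chunks)
```

No single worker ever sees the whole data set; the total exists only after the partial counts are combined.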

Postmodern relativism was a cultural crisis instigated by too much data, as the volume, variety, velocity, and veracity of cultural inputs expanded. The arguments about contingency that animated poststructuralism, literary theory, feminist theory, and the postcolonial were each in their own way a declaration that the way we received, stored, and analyzed data was ignorant of and insufficient for entire sections of cultural production. That is, the universal set of values, the agreed-upon teleology of Western thought posited by modernism, was a fiction; instead there is a multiplicity of means for establishing truth, an infinite number of potential cultural destinies.

When too many types or new sources of data suddenly hit a system and there are not enough tools or schemas to deal with them, the first reaction is to deny the agency of the sources of the data, and then, for a brief period, to suspend meaning making until new tools are built to handle the new volume. As went modernism, so too went our data: both grew too big to be handled on a single server.

In response, new producers, new distribution mechanisms, and new models of discourse were established to accommodate this newly broadened sense of historical possibility. The “total history” of the mid-20th century Annales School operated with a “ground up” paradigm; now, when a marginalized social group competes on the historical stage, the relevance is coming to be meted out in binary code. Datasift, a Big Data analytics company that promises to “power any decision with social data,” refers to its social media crawling collection and analytics platform as “Historics.” What once was latent in a postmodern denial of historical agency is now manifesting itself in the ways that both the consumer subject and the historical subject are enmeshed in data-collecting communication networks built for precise analysis and control. The conditions that generated postmodernism were an intellectual half-step toward the logic that permits the hegemony of networked computing — the era of grappling with Big Data.

***

Information theory helped prompt the crisis of modernism in visual art. From the mid-20th century on, artists were finding new points of cultural exchange: Dennis Oppenheim’s earthworks, Chris Burden’s body, Robert Barry’s “Closed Gallery Piece,” and Sol LeWitt’s instructions each created new problems for the monolithic structure that modernism had erected. Whether you look to the rise of conceptualism in the 1960s, Harald Szeemann’s sprawling “When Attitudes Become Form” (1969), Jack Burnham’s Systems Esthetics (1968), or the writings and practice of Lucy Lippard, it’s clear that many artists and critics came to distrust the singular art object and its relation to history and the market. High Modernism, and its critical structure, was fast becoming a legacy database.

We might term this a crisis of an object-oriented data model that stored information only on the basis of a central and deterministic principle. In Systems Esthetics, Burnham observed that the function of art was no longer held by material objects but instead resided in “relations between people and the components of their environment.” For Burnham, “in an advanced technological culture the most important artist best succeeds by liquidating his position as artist vis-a-vis society.”

Thus the conceptualists turned to the transmission of information as their subject. Referring to the nascent conceptual practice of his time (Dan Flavin, Robert Morris, Robert Smithson, Carl Andre, and Les Levine), Burnham noted that the rise of information primacy in art “has no critical vocabulary for its defense.” All attempts to defend it with modernist logic would amount to limiting its scope and imposing the teleological “objective” value system of high modernism on it.

As a result, not only have the definitions of acceptable inputs changed, but the notion that we would all agree on a model for the analysis of cultural production — on critical criteria — has been rethought. Big Data already operates from this apparently postmodernist logic. Hadoop, a leading Big Data technology, is referred to by some as a “framework of tools” that is “open source,” seemingly borrowing an ethics from a variety of poststructuralist shibboleths. 10gen, the developer of the NoSQL database technology MongoDB, points out that while a “SQL database needs to know what you are storing in advance,” NoSQL databases are “built to allow the insertion of data without a predefined schema.” MongoDB claims that “document-oriented and object-oriented databases are philosophically more different than one might at first expect”: “Objects have methods, predefined schema, inheritance hierarchies,” yet in a NoSQL framework, these are de-emphasized.
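The contrast 10gen draws can be made concrete with a small sketch. This is an illustration under stated assumptions, not MongoDB's own API: Python's built-in `sqlite3` module stands in for "a SQL database," a plain list of dictionaries stands in for a schema-less document collection, and the table name, field names, and placeholder text are all invented for the example.

```python
import sqlite3

# Relational side: the table's columns are declared before anything is stored.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE artworks (title TEXT, artist TEXT)")
conn.execute(
    "INSERT INTO artworks VALUES (?, ?)",
    ("Closed Gallery Piece", "Robert Barry"),
)


def insert_undeclared_field(connection):
    """Try to store a field the schema never declared; report what happens."""
    try:
        connection.execute(
            "INSERT INTO artworks (artist, instructions) VALUES (?, ?)",
            ("Sol LeWitt", "placeholder instruction text"),
        )
        return "accepted"
    except sqlite3.OperationalError:
        # SQLite refuses the row: the table has no column named 'instructions'.
        return "rejected"


sql_result = insert_undeclared_field(conn)

# Document side: a list of dicts is a hypothetical stand-in for a schema-less
# collection. Each record simply carries whatever fields it happens to have.
collection = []
collection.append({"title": "Closed Gallery Piece", "artist": "Robert Barry"})
collection.append({"artist": "Sol LeWitt", "instructions": "placeholder instruction text"})

field_sets = [sorted(doc.keys()) for doc in collection]
```

The relational table rejects the record whose shape it did not anticipate, while the document collection accepts both records and leaves the question of what they have in common to whoever reads them later.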

In 1968, Burnham presaged the shift from object-oriented to systems-oriented criteria. Fundamental was the way in which he saw information systems supplanting the aesthetic object. In a fully formed systems aesthetics, he argued, “judgment demands precise socio-technical models.” The project of Big Data, though still largely undefined, might be viewed as a “socio-technical” model built on the intellectual soil of systems-oriented culture but also on the ethical basis of a centerless postmodernism that thrives in a subject-less vacuum. Postmodernism, likewise, might be understood as the period in which an efflorescence of unstructured, non-teleological, non-narrative inputs hit with scarcely a strain of intellectual or technical tools to digest them.

Today, digital media, even at its most elemental level, is always about selection. One is never creating anew but manipulating signals, manipulating data. Systems Esthetics links to Big Data in that it echoes Lev Manovich’s claims that a new media aesthetic is constructed around the idea that the “natural” authorial act is now one of aggregating and modeling data elements. Big Data apes such historiographical imperatives. Like the data scientist, a critic or artist does not create or dictate cultural meaning as “wrought out” of the author’s original object but instead observes a continuous spectrum of information. The radical act of the modernist archive is now a Hadoop cluster.

Thus a newly empowered subjectivity enters on the heels of voluminous, variegated, and disparate data inputs. The ideology of Big Data — that capitalists can and should mine a massive, schema-less trove of information that we produce for patterns — is implemented through the discourse of e-commerce, social media, comments, video, and log files. By these means, capitalism rushes to the oracle of difficult-yet-omnipresent data to unlock and replenish the faith in a universal certainty and meaning. Judgment and certainty can then return to art only as networked aesthetic discourse — as the output of Big Data–style analysis. Hermeneutics becomes statistics. A critic’s opinion can be modelled, predicted, and exposed for its cultural contingencies and biases: Just map their browsing history, show a histogram of authors they cite by age, race, and sex. Analytics permits few myths since narrative scarcely survives reams of data.

The critical intelligentsia thus finds itself at a crossroads. It can fully abandon the promise of Enlightenment certainty and modernist value systems, but we should also consider what a full embrace of networked critique entails. Crowdsourcing the humanities means redefining social relations that have for centuries established their apparent value. Wikipedia isn’t earning anyone tenure. Yet the alternative to Big Dataism is to double down on an impoverished and nonexistent value system. Shouldn’t humanists follow the technophiliac intelligentsia and “look at the data”?

In the transition from an object-oriented to a systems-oriented culture that Burnham championed, he saw change emanating “not from things, but from the way things are done.” We may have chosen the worst of both worlds: extending the myth of the fetishized art object (supported by the art market) while simultaneously embracing network discourse and algorithmic evaluation.

Big Data might come to be understood as Big Postmodernism: the period in which the influx of unstructured, non-teleological, non-narrative inputs ceased to destabilize the existing order but was instead finally mastered and processed by a sufficiently complex, distributed, and pluralized algorithmic regime. If Big Data has a skepticism built in, how this differs from the skepticism of postmodernism is perhaps impossible yet to comprehend.