Save the World

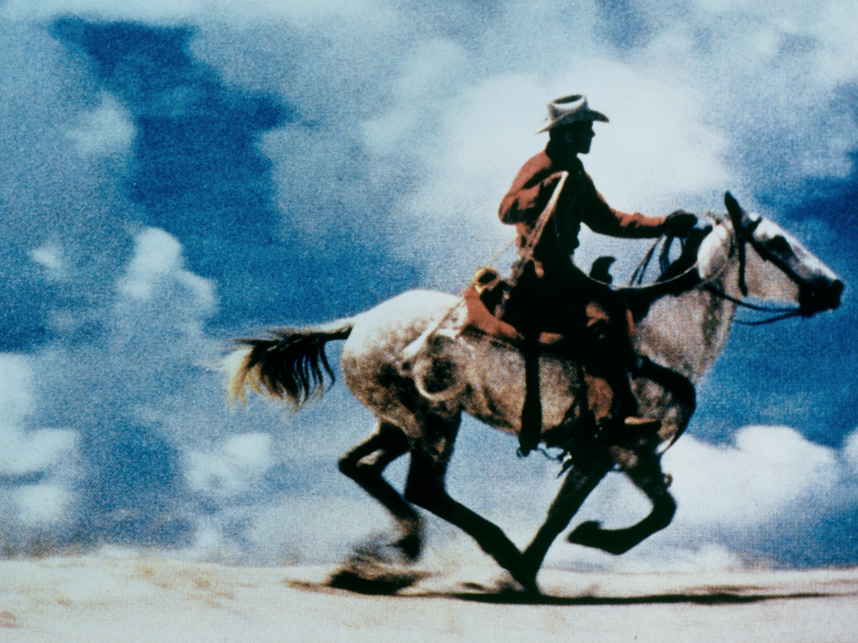

How Star Wars films thematize their own irrelevance

Ariana Reines's poem "Save the World" describes, among other things, the experience of going to the cineplex to see a science fiction blockbuster. The lines quoted below come as the speaker of the poem is watching the movie:

I can see that they intend to do this / I can see that they think / We deserve this / We should be punished / We must want what they are punishing us / For wanting / They are giving it to us / And they are definitely doing it in this way / At such length / In these colors / Because they mean to / It is clear to me / They are disgruntled in Hollywood / They are blaming us / For what must be their grave / Disappointment and sorrow

Having recently been at a cineplex myself to watch a science fiction blockbuster, I could relate to this. We went to see The Last Jedi last week, out…